A Journey With Midjourney

Exploring an Idea With Midjourney

I haven’t seen anyone talk about what it’s like to try to work with Midjourney, or any of the other image AIs. No one has shown just how much work it takes to get from an idea to the beautiful output we keep seeing.

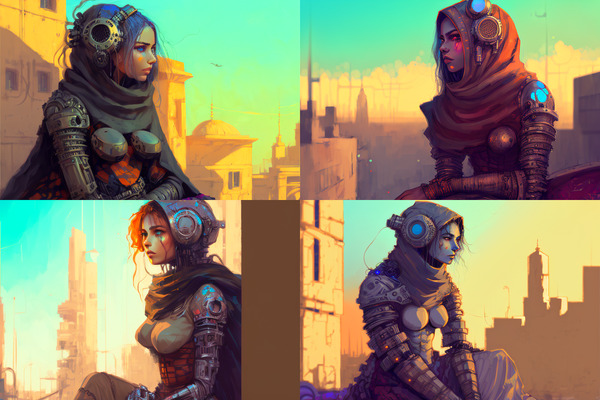

This post will take you through the journey, from a spark of colorful and strong Native American imagery, through depressed cyborgs, to visions of Muslim women in a dry and trying future.

I’ll explain my thoughts along the way, and how I crossed notable thresholds in the process.

Legal

Just in case anyone gets any ideas: The images in this document are all distributed under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 license, with the exception of the first one, which is copyright its author (moon.goat.616) and is used under Fair Use.

The Spark

Yesterday I saw a truly impressive image of a Native American person in the Community Feed.

This was created by user moon.goat.616. That link will only work if you’re a Midjourney subscriber.

Midjourney doesn’t let you directly recreate other people’s images, but it will let you see what prompt they entered into the system to generate it. Most of the time, copy-pasting their prompt will generate absolute garbage. This is because the pretty-pretty they generated was typically the result of many iterations and tweaks.

This doesn’t mean the prompts are useless. They’re incredibly useful, because you’ll discover new keywords that help guide the AI in a direction you want.

Every now and then, the prompt just works…

That was really surprising. I was tempted to start working with that, but as a privileged white person I don’t feel particularly comfortable making representations of Native American women. There are so many things I can’t speak to with knowledge or authority.

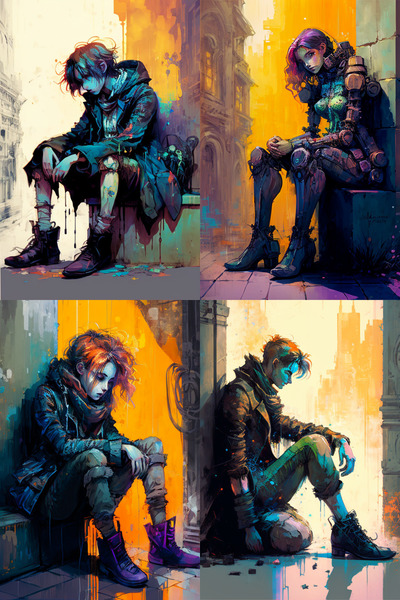

But the colors were so amazing. “What about a depressed android in those colors?” I thought.

So, I extracted all the keywords, removed the stuff that pointed it towards Native American imagery (“tribal outcast”) and replaced it with “depressed android. sitting. slumped. against wall.”

Depressed looking? ✅

Android? Kind of.

I was looking for less… human looking things.

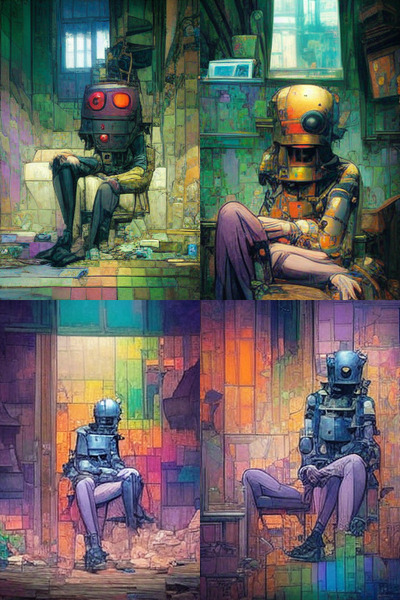

Maybe if I try “rubot” instead of “android”

Shit… that’s not how you spell “robot”.

Interesting but… really hard to parse what’s going on.

Back to “cyborg”, but I added “salvage” and “junk”. After a couple of iterations I got this.

Now we’re talking!

Depressed looking? ✅

Cyborg? ✅

A bit… too human looking. I was really hoping to generate images with boxy looking robots but I’m loving the color work, and there’s something about these women. I decide to see where I can take this.

It took about four simple iterations to get to that. There are a handful of other good images from that work too.

Grand total, I’ve spent about 20 minutes. It’s at this point I finally break free from this fascinating thing and go back to my actual task. I had only opened Midjourney to find an example of something to show someone on Discord.

…

That night, drained from fighting with buggy libraries and systems, I decide to relax by playing around with Midjourney again.

I start again with two tasks in mind.

- I want those vibrant colors I started with. This stuff is great, but it’s muted in comparison.

- I want actual mechanical looking robots, not women with various doo-dads attached to them.

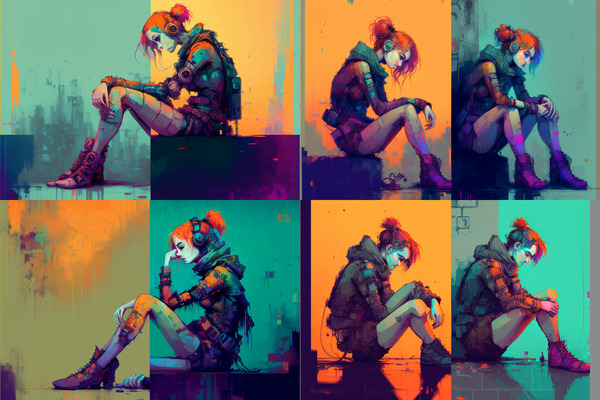

I start with the first task: adding vibrancy.

I add “Intense color” to the prompt (it already had “vibrant colors”) and feed it one of the good close-ups to start with.

I go through four more iterations, selecting for color and face, and then I make a mistake.

I decide to feed it one of the past images as input, but I choose a 4-up image instead of a single image.

Cool! Not what I want, but man, look at those left two! That’s awesome.

I backtrack, and instead feed this into it as a guide.

And now I’ve got my vibrant colors

Alas, if you look closely you’ll note that I’ve lost all the detail. There are two other problems.

- they don’t look like androids at all

- my brain is raising warning flags about the future of those boobs.

A few iterations later and the boobs are definitely a problem. I really like this image though.

I should probably point out that Midjourney’s people have worked really hard to keep porn out of the system. You will almost never see a nipple in Midjourney art. I believe they trained something to find and get rid of nipples in the training material before training on it.

I’m pretty sure she’d have nipples if not for that.

A few tweaks later on and I managed to get a version without the boob-windows in the outfit, but the rest of it just wasn’t as good.

You’ll also notice that the backgrounds have started to change. They looked like they were hinting at walls and corners, so I added in “distant cityscape” and that really helped. Keep an eye on the backgrounds as we progress.

There were a lot of boobs along the way, so I also decide to explore what happens if I lean in to what it’s obviously trying to do. My thinking is that if I try to guide it, instead of fighting it, we might get some interesting results.

We’re obviously far from our starting point of “robot” but I’m enjoying what’s coming out of it. So, “couture outfit” gets added to the mix. I’m thinking lots of “couture” fashion has dramatic boob things, but actually manages to cover the boobs.

A handful of iterations on that and I realize that I got what I asked for and I really don’t want it.

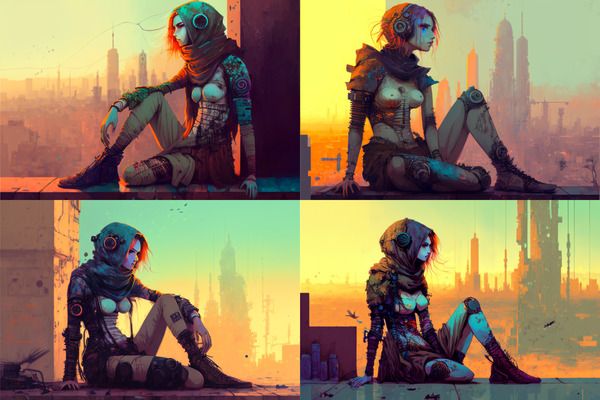

So, I backtrack, and keep feeding chosen pieces of output back into the system. I add in “4k. hdr.” to see if we can bring out a bit more realism.

This is… amazing.

I love how the city is emerging from the background, and how the blue and orange are contrasting her against the sky.

Her hood gives me an idea though. It’s got enough fabric that it could be a hijab that’s been let down. What if I add “Muslim” to the mix? Might that counter the boob factor too?

No. No it will not.

This, plus future iterations I’m not going to show, leads me to believe that in the future Muslim women will decide to keep the hijab, but rebel against the male oppression by showing their boobs at every available opportunity.

Really though, it just means that the only thing Midjourney knows about Muslim women is that they have covered heads.

BUT… the city!

Also, note that the color palette has changed. I can only assume that this is because a lot of the training material involving Muslims is from parts of the world that have a lot more desert.

I eventually get one with a great head, but the boobs have become ridiculous. Like… runway supermodel combined with eccentric designer stuff that… just… just no.

So, I edited an image, cropped it to just the head, and fed that in as source material plus the prompts.

My friends… the algorithm wants boobs.

But… I’m iterating. I’m combining. I’m tweaking… I’ve replaced “depressed” with “optomistic” and it hasn’t helped much. It isn’t until a while later that I realize that it’s spelled “optimistic” and thus the bad spelling was just being ignored. Also, it’s just not a great keyword. I didn’t want to go all the way to “smiling” or “happy”. I liked the vibe of these.

I add --no chest (“boob” is a restricted word) and try “metal chest plate”. The latter does nothing useful. The former helped get rid of some of the more egregious boob images.
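If you wanted to keep track of this kind of keyword juggling outside of Discord, a minimal Python sketch might look like the one below. The helper names (`swap_keyword`, `build_prompt`) and the keyword list are hypothetical, chosen for illustration from keywords mentioned in this post. They are not the exact prompt behind these images. The only Midjourney-specific piece is the `--no` parameter, which I'm assuming here takes a comma-separated list.

```python
def swap_keyword(keywords, old, new):
    """Return a copy of the keyword list with one keyword replaced."""
    return [new if k == old else k for k in keywords]


def build_prompt(keywords, exclude=()):
    """Join keywords with '. ' and append an optional --no exclusion list."""
    # Drop empty or whitespace-only entries so they don't leave stray separators.
    cleaned = [k.strip() for k in keywords if k.strip()]
    prompt = ". ".join(cleaned)
    if exclude:
        # Assumption: --no takes a comma-separated list of things to avoid.
        prompt += " --no " + ", ".join(exclude)
    return prompt


# Illustrative keyword list only; not the real prompt from this post.
keywords = ["depressed cyborg", "vibrant colors", "intense color",
            "distant cityscape", "4k", "hdr"]

keywords = swap_keyword(keywords, "depressed cyborg", "optimistic cyborg")
print(build_prompt(keywords, exclude=["chest"]))
```

Keeping the keywords in a list makes each change (one swap, one exclusion) easy to see and easy to undo, which matters when most of the work is iterating on images rather than rewriting the prompt.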

We veer a little into anime too.

From here on it’s just a matter of taking generated images and feeding them back into the system with the same keywords, to “restart” the process and focus it on specific aspects. I could have started mucking with the keywords to change things but I liked the direction it was going in.

I try to direct it more towards dark skin by using “tanned”. I try to avoid “dark skin” because it’ll quickly trend towards an African look instead of a Middle Eastern one.

There are a few other nice images from this, but this journey has taken four and a half hours of work, and the generation of at least a hundred images. I need some sleep.

Things of note

Photorealism

It should be noted that, with the exception of the first two images, none of these are even remotely photorealistic.

This is pretty normal in my experience. Getting something that looks “real” seems to be largely a question of how much real-looking content about that subject already exists in the world to go into the training system.

Note that we went from painterly, but very close to photo, to something almost impressionist in one step, and never really came back.

I’m confident that it’s possible to push this series towards photorealism, but that wasn’t my goal. It’d also take a lot of work.

Hands

The hands are… problematic. The hands are problematic in all the image AIs. If you see normal looking hands in an AI generated image it either means the person edited the image, or they were exceptionally lucky.

There were a lot of images along the way with floppy spaghetti arms, detached hands, and forty-gajillion fingers.

Creativity

I’ve heard a lot of comments that implied that creating images with AI was just a matter of creative problem solving around choosing words to use in prompts. Anyone who has used these systems knows that isn’t true. The creation of these images wasn’t a matter of me constantly tweaking and perfecting a set of words. The words at the start and the words at the end barely changed. I didn’t leave out any notable changes in the prompt, and the changes I did make were very minor.

Working with an AI is more a matter of trying things until you find a style you like, and then encouraging it to go in a direction. It’s like working with a river. You can’t really control it, but you can encourage it to help you achieve a goal.

Sculpting marble is literally “just” a matter of choosing what pieces to cut off of the block. It’s “just” a piece of “creative problem solving”. You don’t need to be creative. You don’t need to be an artist. You just need to choose what pieces to remove.

If you believe that, then well… I hope you at least enjoyed the pictures.

Welcome to a new, and amazing, tool for artists.